| Age | Commit message | Author | Files | Lines |

|---|

|

Summary:

Fixes https://github.com/pytorch/pytorch/issues/18983

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18988

Differential Revision: D14820042

Pulled By: soumith

fbshipit-source-id: 356169f554a42303b266d700d3379a5288f9671d

|

|

Summary:

Fixes: https://github.com/pytorch/pytorch/issues/14093

cc: SsnL

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18395

Differential Revision: D14599509

Pulled By: umanwizard

fbshipit-source-id: 2391a1cc135fe5bab38475f1c8ed87c4a96222f3

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18883

Differential Revision: D14793365

Pulled By: ezyang

fbshipit-source-id: c1b46c98e3319badec3e0e772d0ddea24cbf9c89

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18802

Differential Revision: D14781874

Pulled By: ezyang

fbshipit-source-id: 0f94c40bd84c84558ea3329117580f6c749c019f

|

|

Summary:

Per our offline discussion, allow Tensors, ints, and floats to be cast to bool when used in a conditional

Fix for https://github.com/pytorch/pytorch/issues/18381

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18755

Reviewed By: driazati

Differential Revision: D14752476

Pulled By: eellison

fbshipit-source-id: 149960c92afcf7e4cc4997bccc57f4e911118ff1

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18230

Implementing minimum qtensor API to unblock other workstreams in quantization

Changes:

- Added Quantizer which represents different quantization schemes

- Added qint8 as a data type for QTensor

- Added a new ScalarType QInt8

- Added QTensorImpl for QTensor

- Added following user facing APIs

- quantize_linear(scale, zero_point)

- dequantize()

- q_scale()

- q_zero_point()
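The commit lists the user-facing calls but not the arithmetic behind them. As a rough illustration only, the sketch below assumes the usual affine scheme, `q = clamp(round(x / scale) + zero_point, -128, 127)`, with dequantization as its inverse. `QTensorSketch` and the free function `quantize_linear` are hypothetical pure-Python stand-ins, not the PyTorch implementation.

```python
from dataclasses import dataclass
from typing import List

QMIN, QMAX = -128, 127  # value range of a signed 8-bit integer


@dataclass
class QTensorSketch:
    """Hypothetical stand-in for a quantized tensor: integers plus parameters."""
    values: List[int]
    scale: float
    zero_point: int

    def q_scale(self) -> float:
        return self.scale

    def q_zero_point(self) -> int:
        return self.zero_point

    def dequantize(self) -> List[float]:
        # Map each stored integer back to an approximate real value.
        return [(q - self.zero_point) * self.scale for q in self.values]


def quantize_linear(xs: List[float], scale: float, zero_point: int) -> QTensorSketch:
    # Assumed formula: q = clamp(round(x / scale) + zero_point, QMIN, QMAX).
    # Python's round() rounds halves to even; a real kernel may round differently.
    qs = [max(QMIN, min(QMAX, round(x / scale) + zero_point)) for x in xs]
    return QTensorSketch(qs, scale, zero_point)


q = quantize_linear([-1.0, 0.0, 0.5, 100.0], scale=0.1, zero_point=10)
restored = q.dequantize()
```

Values that fit the representable range survive the round trip up to `scale`-sized error, while `100.0` saturates at `QMAX`.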

Reviewed By: dzhulgakov

Differential Revision: D14524641

fbshipit-source-id: c1c0ae0978fb500d47cdb23fb15b747773429e6c

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18628

ghimport-source-id: d94b81a6f303883d97beaae25344fd591e13ce52

Stack from [ghstack](https://github.com/ezyang/ghstack):

* #18629 Provide flake8 install instructions.

* **#18628 Delete duplicated technical content from contribution_guide.rst**

There's a useful guide in contribution_guide.rst, but the

technical bits were straight up copy-pasted from CONTRIBUTING.md,

and I don't think it makes sense to break the CONTRIBUTING.md

link. Instead, I deleted the duplicate bits and added a cross

reference to the rst document.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D14701003

fbshipit-source-id: 3bbb102fae225cbda27628a59138bba769bfa288

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18598

ghimport-source-id: c74597e5e7437e94a43c163cee0639b20d0d0c6a

Stack from [ghstack](https://github.com/ezyang/ghstack):

* **#18598 Turn on F401: Unused import warning.**

This was requested by someone at Facebook; this lint is turned

on for Facebook by default. "Sure, why not."

I had to noqa a number of imports in __init__. Hypothetically

we're supposed to use __all__ in this case, but I was too lazy

to fix it. Left for future work.

Be careful! flake8-2 and flake8-3 behave differently with

respect to import resolution for # type: comments. flake8-3 will

report an import unused; flake8-2 will not. For now, I just

noqa'd all these sites.

All the changes were done by hand.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D14687478

fbshipit-source-id: 30d532381e914091aadfa0d2a5a89404819663e3

|

|

Summary:

Changelog:

- Renames `btriunpack` to `lu_unpack` to remain consistent with the `lu` function interface.

- Rename all relevant tests, fix callsites

- Create a tentative alias for `lu_unpack` under the name `btriunpack` and add a deprecation warning to not promote usage.
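The alias-plus-warning pattern in the last bullet can be sketched in plain Python as follows. The function bodies are placeholders, and the warning category and message are assumptions; the commit does not say which ones PyTorch uses.

```python
import warnings


def lu_unpack(lu_data, pivots):
    """Placeholder body; the real function unpacks an LU factorization."""
    return lu_data, pivots


def btriunpack(*args, **kwargs):
    """Deprecated alias that forwards every argument to lu_unpack."""
    warnings.warn(
        "btriunpack is deprecated in favour of lu_unpack",
        DeprecationWarning,
        stacklevel=2,  # attribute the warning to the caller, not the alias
    )
    return lu_unpack(*args, **kwargs)


# Calling the old name still works, but records one warning.
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    result = btriunpack([[1.0]], [1])
```

Old call sites keep working while the warning points users to the new name.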

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18529

Differential Revision: D14683161

Pulled By: soumith

fbshipit-source-id: 994287eaa15c50fd74c2f1c7646edfc61e8099b1

|

|

Summary:

Changelog:

- Renames `btrifact` and `btrifact_with_info` to `lu` to remain consistent with other factorization methods (`qr` and `svd`).

- There is now a single function (and method) named `lu`, which performs LU decomposition. It takes a `get_infos` kwarg which, when set to True, includes an infos tensor in the returned tuple.

- Rename all tests, fix callsites

- Create a tentative alias for `lu` under the name `btrifact` and `btrifact_with_info`, and add a deprecation warning to not promote usage.

- Add the single batch version for `lu` so that users don't have to unsqueeze and squeeze for a single square matrix (see changes in determinant computation in `LinearAlgebra.cpp`)

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18435

Differential Revision: D14680352

Pulled By: soumith

fbshipit-source-id: af58dfc11fa53d9e8e0318c720beaf5502978cd8

|

|

Summary:

This implements a cyclical learning rate (CLR) schedule with an optional inverse cyclical momentum. More info about CLR: https://github.com/bckenstler/CLR

This is finishing what #2016 started. Resolves #1909.
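For readers unfamiliar with CLR, here is a minimal sketch of the basic "triangular" policy described in the linked repository, with a momentum that moves in the opposite direction. The function and parameter names are illustrative assumptions and are not taken from this PR.

```python
import math


def _triangle(iteration: int, step_size: int) -> float:
    """Position in the cycle: 0.0 at the ends, 1.0 at the peak."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return max(0.0, 1.0 - x)


def triangular_lr(iteration: int, base_lr: float, max_lr: float, step_size: int) -> float:
    # Learning rate climbs from base_lr to max_lr over step_size iterations,
    # then descends back over the next step_size iterations.
    return base_lr + (max_lr - base_lr) * _triangle(iteration, step_size)


def inverse_momentum(iteration: int, base_m: float, max_m: float, step_size: int) -> float:
    # Momentum is cycled inversely: highest when the learning rate is lowest.
    return max_m - (max_m - base_m) * _triangle(iteration, step_size)


schedule = [triangular_lr(i, base_lr=0.1, max_lr=0.5, step_size=4) for i in range(9)]
```

With `step_size=4`, the schedule starts at `base_lr`, peaks at iteration 4, and returns to `base_lr` at iteration 8.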

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18001

Differential Revision: D14451845

Pulled By: sampepose

fbshipit-source-id: 8f682e0c3dee3a73bd2b14cc93fcf5f0e836b8c9

|

|

Summary:

There are a number of pages in the docs that serve insecure content. AFAICT this is the sole source of that.

I wasn't sure if docs get regenerated for old versions as part of the automation, or if those would need to be manually done.

cf. https://github.com/pytorch/pytorch.github.io/pull/177

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18508

Differential Revision: D14645665

Pulled By: zpao

fbshipit-source-id: 003563b06048485d4f539feb1675fc80bab47c1b

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18507

ghimport-source-id: 1c3642befad2da78a7e5f39d6d58732b85c76267

Stack from [ghstack](https://github.com/ezyang/ghstack):

* **#18507 Upgrade flake8-bugbear to master, fix the new lints.**

It turns out Facebook is internally using the unreleased master

flake8-bugbear, so upgrading it grabs a few more lints that Phabricator

was complaining about but we didn't get in open source.

A few of the getattr sites that I fixed look very suspicious (they're

written as if Python were a lazy language), but I didn't look more

closely into the matter.
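The commit does not show the suspicious call sites. One plausible reading of the "lazy language" remark is a `getattr` default that the author expected to be evaluated only when the attribute is missing. The sketch below, with hypothetical names, shows that Python evaluates the default eagerly.

```python
calls = []


def expensive_default():
    """Hypothetical fallback with a visible side effect."""
    calls.append("called")
    return "fallback"


class Config:
    name = "present"


# The default expression runs before getattr is even called,
# although the attribute exists and the default is never used.
eager_value = getattr(Config, "name", expensive_default())
calls_after_eager = len(calls)

# An explicit branch builds the fallback only when it is needed.
lazy_value = Config.name if hasattr(Config, "name") else expensive_default()
calls_after_lazy = len(calls)
```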

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Differential Revision: D14633682

fbshipit-source-id: fc3f97c87dca40bbda943a1d1061953490dbacf8

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18493

Differential Revision: D14634677

Pulled By: jamesr66a

fbshipit-source-id: 9ee065f6ce4218f725b93deb4c64b4ef55926145

|

|

enforce tensors.rst no longer miss anything (#16057)

Summary:

This depends on https://github.com/pytorch/pytorch/pull/16039

This prevents people (reviewers, PR authors) from forgetting to add things to `tensors.rst`.

When something new is added to `_tensor_doc.py` or `tensor.py` but intentionally not in `tensors.rst`, people should manually whitelist it in `test_docs_coverage.py`.
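The idea behind such a coverage check can be sketched as a set difference. Everything below is a hypothetical illustration: the directive regex, the sample names, and the whitelist are assumptions, not the contents of `test_docs_coverage.py`.

```python
import re

# Hypothetical excerpt of an .rst file using Sphinx autodoc directives.
SAMPLE_RST = """
.. autoclass:: Tensor

   .. automethod:: abs
   .. automethod:: add
"""


def documented_names(rst_text: str) -> set:
    # Collect the names that follow automethod/autofunction/autoattribute directives.
    pattern = r"^[ \t]*\.\. auto(?:method|function|attribute):: (\w+)"
    return set(re.findall(pattern, rst_text, re.MULTILINE))


def missing_from_docs(public_names, rst_text: str, whitelist) -> list:
    # Anything defined in code, absent from the docs, and not whitelisted is an error.
    return sorted(set(public_names) - documented_names(rst_text) - set(whitelist))


missing = missing_from_docs(
    public_names=["abs", "add", "clone", "internal_helper"],
    rst_text=SAMPLE_RST,
    whitelist={"internal_helper"},
)
```

A test built on this would fail whenever `missing` is non-empty, so a new name must be either documented or whitelisted.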

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16057

Differential Revision: D14619550

Pulled By: ezyang

fbshipit-source-id: e1c6dd6761142e2e48ec499e118df399e3949fcc

|

|

Summary:

This PR adds a Global Site Tag to the site.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17690

Differential Revision: D14620816

Pulled By: zou3519

fbshipit-source-id: c02407881ce08340289123f5508f92381744e8e3

|

|

Summary:

`SobolEngine` is a quasi-random sampler used to sample points evenly between [0,1]. Here we use direction numbers to generate these samples. The maximum supported dimension for the sampler is 1111.
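To give a feel for how direction numbers produce samples, the sketch below covers only the first dimension, where the direction numbers are simply 1/2, 1/4, 1/8, and so on, and the point for index `n` is the XOR of those selected by the set bits of `n`. It is a simplified illustration with hypothetical names. The real engine uses tabulated direction numbers for higher dimensions and may emit points in a different (Gray code) order.

```python
BITS = 30  # fixed-point precision for the sketch

# First-dimension direction numbers v_k = 2^-k, stored as BITS-bit integers.
DIRECTIONS = [1 << (BITS - k) for k in range(1, BITS + 1)]


def sobol_first_dim(n: int) -> float:
    """Return point number n (0 <= n < 2**BITS) of the 1-D sequence in [0, 1)."""
    x = 0
    k = 0
    while n:
        if n & 1:
            x ^= DIRECTIONS[k]  # combine the direction number for bit k
        n >>= 1
        k += 1
    return x / (1 << BITS)


points = [sobol_first_dim(i) for i in range(8)]
```

Each new point falls into the largest gap left by the previous ones, which is what makes the sequence fill the interval more evenly than pseudo-random draws.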

Documentation has been added, and tests have been added based on Balandat's references. The implementation is an optimized, tensorized version of Balandat's Cython implementation provided in #9332.

This closes #9332.

cc: soumith Balandat

Pull Request resolved: https://github.com/pytorch/pytorch/pull/10505

Reviewed By: zou3519

Differential Revision: D9330179

Pulled By: ezyang

fbshipit-source-id: 01d5588e765b33b06febe99348f14d1e7fe8e55d

|

|

Summary:

This is to fix #16141 and similar issues.

The idea is to track a reference to every shared CUDA Storage and deallocate memory only after a consumer process deallocates received Storage.

ezyang Done with cleanup. Same (insignificantly better) performance as in file-per-share solution, but handles millions of shared tensors easily. Note [ ] documentation in progress.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16854

Differential Revision: D13994490

Pulled By: VitalyFedyunin

fbshipit-source-id: 565148ec3ac4fafb32d37fde0486b325bed6fbd1

|

|

Summary:

* Adds more headers for easier scanning

* Adds some line breaks so things are displayed correctly

* Minor copy/spelling stuff

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18234

Reviewed By: ezyang

Differential Revision: D14567737

Pulled By: driazati

fbshipit-source-id: 046d991f7aab8e00e9887edb745968cb79a29441

|

|

Summary:

Changelog:

- Renames `trtrs` to `triangular_solve` to remain consistent with `cholesky_solve` and `solve`.

- Rename all tests, fix callsites

- Create a tentative alias for `triangular_solve` under the name `trtrs`, and add a deprecation warning to not promote usage.

- Move `isnan` to _torch_docs.py

- Remove unnecessary imports

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18213

Differential Revision: D14566902

Pulled By: ezyang

fbshipit-source-id: 544f57c29477df391bacd5de700bed1add456d3f

|

|

Summary:

Fixes a typo and a link in `docs/source/community/contribution_guide.rst`

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18237

Differential Revision: D14566907

Pulled By: ezyang

fbshipit-source-id: 3a75797ab6b27d28dd5566d9b189d80395024eaf

|

|

Summary:

Changelog:

- Renames `gesv` to `solve` to remain consistent with `cholesky_solve`.

- Rename all tests, fix callsites

- Create a tentative alias for `solve` under the name `gesv`, and add a deprecation warning to not promote usage.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/18060

Differential Revision: D14503117

Pulled By: zou3519

fbshipit-source-id: 99c16d94e5970a19d7584b5915f051c030d49ff5

|

|

Summary:

Fix a very common typo in my name.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17949

Differential Revision: D14475162

Pulled By: ezyang

fbshipit-source-id: 91c2c364c56ecbbda0bd530e806a821107881480

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/18008

Differential Revision: D14455117

Pulled By: soumith

fbshipit-source-id: 29d9a2e0b36d72bece0bb1870bbdc740c4d1f9d6

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17973

Differential Revision: D14438725

Pulled By: zou3519

fbshipit-source-id: 30a5485b508b4ae028057e0b66a8abb2b163d66b

|

|

Summary: Adding new documents to the PyTorch website to describe how PyTorch is governed, how to contribute to the project, and lists persons of interest.

Reviewed By: orionr

Differential Revision: D14394573

fbshipit-source-id: ad98b807850c51de0b741e3acbbc3c699e97b27f

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17851

Differential Revision: D14401791

Pulled By: soumith

fbshipit-source-id: ed6d64d6f5985e7ce76dca1e9e376782736b90f9

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17706

Differential Revision: D14346482

Pulled By: ezyang

fbshipit-source-id: 7c85e51c701f6c0947ad324ef19fafda40ae1cb9

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17705

Differential Revision: D14338380

Pulled By: ailzhang

fbshipit-source-id: d53eece30bede88a642e718ee6f829ba29c7d1c4

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17607

Differential Revision: D14281291

Pulled By: yf225

fbshipit-source-id: 51209c5540932871e45e54ba6d61b3b7d264aa8c

|

|

Summary:

as title

Pull Request resolved: https://github.com/pytorch/pytorch/pull/17476

Differential Revision: D14218312

Pulled By: suo

fbshipit-source-id: 64df096a3431a6f25cd2373f0959d415591fed15

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/17421

Differential Revision: D14194877

Pulled By: soumith

fbshipit-source-id: 6173835d833ce9e9c02ac7bd507cd424a20f2738

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/16640

Differential Revision: D14178270

Pulled By: driazati

fbshipit-source-id: 581040abd0b7f8636c53fd97c7365df99a2446cf

|

|

Summary:

Based on https://github.com/pytorch/pytorch/pull/12413, with the following additional changes:

- Inside `native_functions.yml`, move the out-of-place operators right next to their corresponding in-place operators, so reviewers can easily check that they match

- `matches_jit_signature: True` for them

- Add missing `scatter` with Scalar source

- Add missing `masked_fill` and `index_fill` with Tensor source.

- Add missing test for `scatter` with Scalar source

- Add missing test for `masked_fill` and `index_fill` with Tensor source by checking the gradient w.r.t source

- Add missing docs to `tensor.rst`

Differential Revision: D14069925

Pulled By: ezyang

fbshipit-source-id: bb3f0cb51cf6b756788dc4955667fead6e8796e5

|

|

Summary:

The one_hot docs are missing [here](https://pytorch.org/docs/master/nn.html#one-hot).

I dug around and could not find a way to get this working properly.

Differential Revision: D14104414

Pulled By: zou3519

fbshipit-source-id: 3f45c8a0878409d218da167f13b253772f5cc963

|

|

longer miss anything (#16039)

Summary:

This prevents people (reviewers, PR authors) from forgetting to add things to `torch.rst`.

When something new is added to `_torch_doc.py` or `functional.py` but intentionally not in `torch.rst`, people should manually whitelist it in `test_docs_coverage.py`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16039

Differential Revision: D14070903

Pulled By: ezyang

fbshipit-source-id: 60f2a42eb5efe81be073ed64e54525d143eb643e

|

|

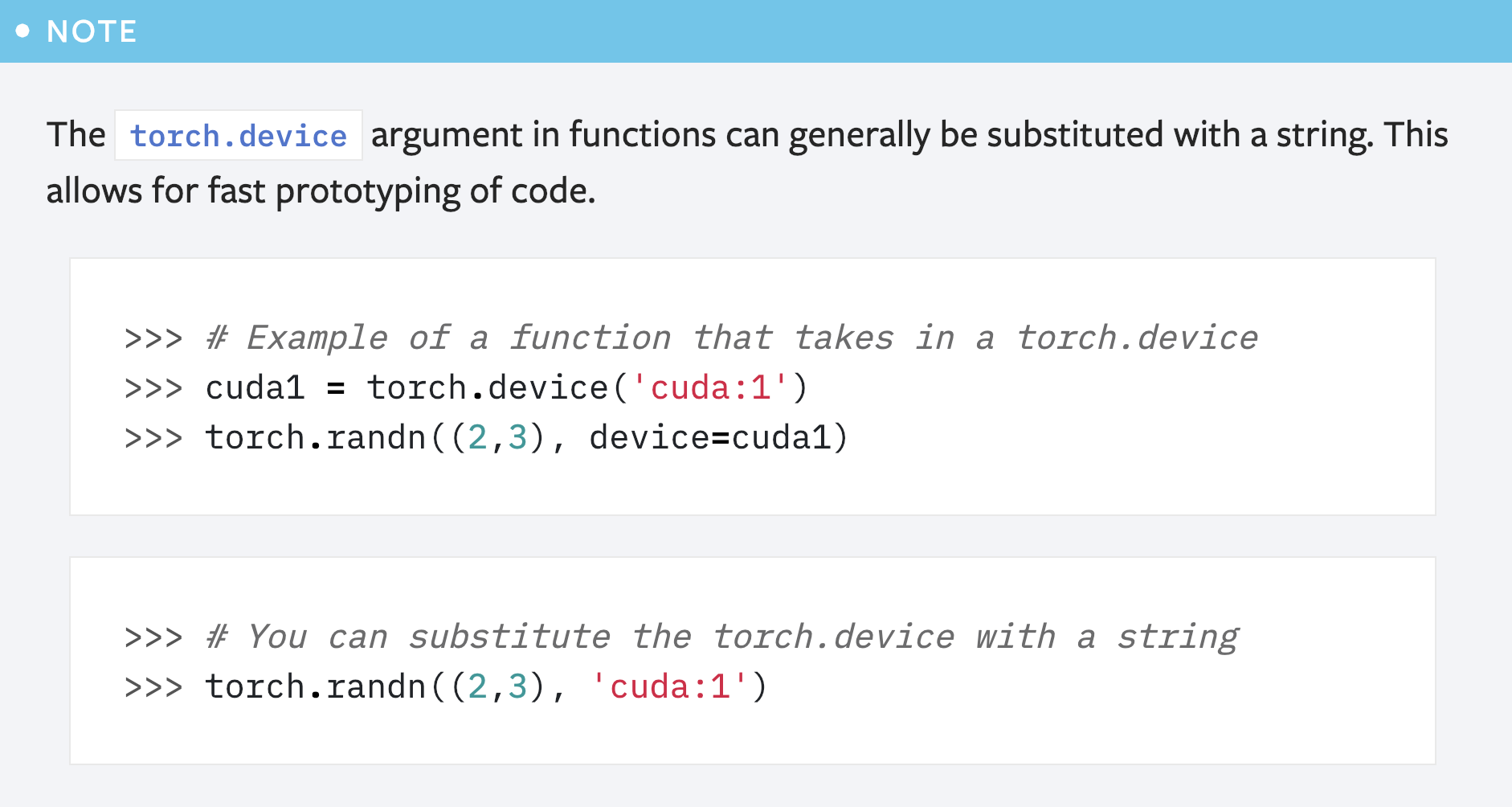

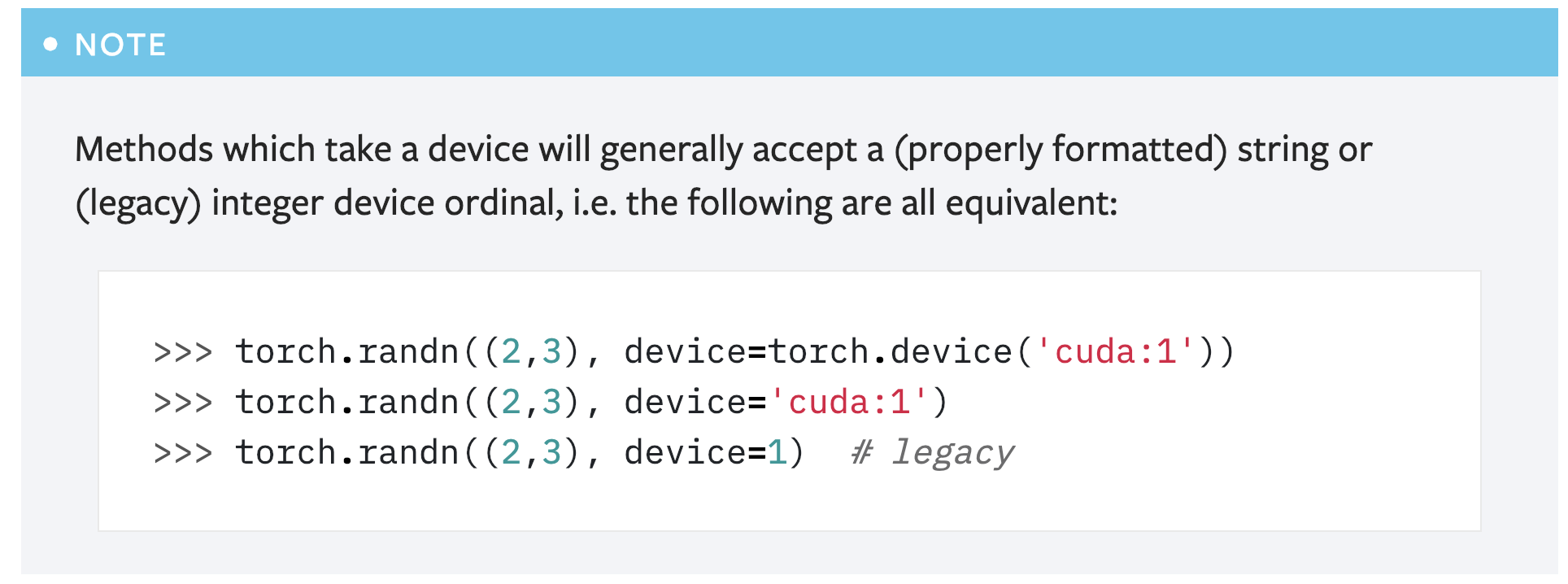

Summary:

This PR is a simple fix for the mistake in the first note for `torch.device` in the "tensor attributes" doc.

```
>>> # You can substitute the torch.device with a string
>>> torch.randn((2,3), 'cuda:1')
```

The code above raises an error like the following:

```
---------------------------------------------------------------------------
TypeError Traceback (most recent call last)
<ipython-input-53-abdfafb67ab1> in <module>()
----> 1 torch.randn((2,3), 'cuda:1')

TypeError: randn() received an invalid combination of arguments - got (tuple, str), but expected one of:
* (tuple of ints size, torch.Generator generator, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool requires_grad)
* (tuple of ints size, Tensor out, torch.dtype dtype, torch.layout layout, torch.device device, bool requires_grad)
```

Simply adding the argument name `device` solves the problem: `torch.randn((2,3), device='cuda:1')`.

However, another concern is that this note seems redundant as **there is already another note covering this usage**:

So maybe it's better to just remove this note?

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16839

Reviewed By: ezyang

Differential Revision: D13989209

Pulled By: gchanan

fbshipit-source-id: ac255d52528da053ebfed18125ee6b857865ccaf

|

|

Summary:

Some batched updates:

1. bool is a type now

2. Early returns are allowed now

3. The beginning of an FAQ section with some guidance on the best way to do GPU training + CPU inference

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16866

Differential Revision: D13996729

Pulled By: suo

fbshipit-source-id: 3b884fd3a4c9632c9697d8f1a5a0e768fc918916

|

|

Summary: fixes #16141

Differential Revision: D13868539

Pulled By: ailzhang

fbshipit-source-id: 03e858d0aff7804c5e9e03a8666f42fd12836ef2

|

|

Summary:

Now that https://github.com/pytorch/pytorch/pull/15587 has landed, updating docs.

Will close https://github.com/pytorch/pytorch/issues/15278

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16380

Differential Revision: D13825221

Pulled By: eellison

fbshipit-source-id: c5a7a7fbb40ba7be46a80760862468f2c9967169

|

|

Summary:

Relates to this issue https://github.com/pytorch/pytorch/issues/16288

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16324

Reviewed By: ezyang

Differential Revision: D13805412

Pulled By: suo

fbshipit-source-id: 8b80f988262da2c717452a71142327bbc23d1b8f

|

|

Summary:

- probabilty -> probability

- make long lines break

- Add LogitRelaxedBernoulli in distribution.rst

Pull Request resolved: https://github.com/pytorch/pytorch/pull/16136

Differential Revision: D13780406

Pulled By: soumith

fbshipit-source-id: 54beb975eb18c7d67779a9631dacf7d1461a6b32

|

|

Summary:

Addresses #15968

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15985

Differential Revision: D13649916

Pulled By: soumith

fbshipit-source-id: a207aea5709a79dba7a6fc541d0a70103f49efff

|

|

Summary:

Fixes #15700.

Changelog:

- Expose torch.*.is_floating_point to docs

Differential Revision: D13580734

Pulled By: zou3519

fbshipit-source-id: 76edb4af666c08237091a2cebf53d9ba5e6c8909

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/15628

Differential Revision: D13562685

Pulled By: soumith

fbshipit-source-id: 1621fcff465b029142313f717035e935e9159513

|

|

Summary:

Now that `cuda.get/set_rng_state` accept `device` objects, the default value should be a device object, and the docs should say so.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/14324

Reviewed By: ezyang

Differential Revision: D13528707

Pulled By: soumith

fbshipit-source-id: 32fdac467dfea6d5b96b7e2a42dc8cfd42ba11ee

|

|

Summary:

Fixes https://github.com/pytorch/pytorch/issues/15062

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15112

Differential Revision: D13547845

Pulled By: soumith

fbshipit-source-id: 61e3e6c6b0f6b6b3d571bee02db2938ea9698c99

|

|

Summary:

https://github.com/pytorch/pytorch/pull/14710 with test fixed.

Also added `finfo.min` and `iinfo.min` to get castable tensors.

cc soumith

Pull Request resolved: https://github.com/pytorch/pytorch/pull/15046

Reviewed By: soumith

Differential Revision: D13429388

Pulled By: SsnL

fbshipit-source-id: 9a08004419c83bc5ef51d03b6df3961a9f5dbf47

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/15512

Differential Revision: D13545775

Pulled By: soumith

fbshipit-source-id: 2a8896571745630cff4aaf3d5469ef646bdcddb4

|

|

Summary: Closes: https://github.com/pytorch/pytorch/issues/15060

Differential Revision: D13528014

Pulled By: ezyang

fbshipit-source-id: 5a18689a4c5638d92f9390c91517f741e5396293

|