
|

Summary:

This is not a functional change. It fixes a typo: I forgot to update the link to windows_smoke_tests.csv in test_python_first_shard. The windows_smoke_tests.csv file is currently identical in pytorch/test-infra and in my fork, janeyx99/test-infra, but that will not be the case in the future.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62042

Reviewed By: seemethere

Differential Revision: D29851984

Pulled By: janeyx99

fbshipit-source-id: 9bafdf0ba006b9128463e3cf132fdfcddd3d10f2

|

|

Summary:

Being able to download the .ninja_log makes build debugging easier. A follow-up PR may convert it into a more useful trace file.

This PR only handles Windows, since Linux is already handled here:

https://github.com/pytorch/pytorch/blob/master/.jenkins/pytorch/build.sh#L248-L252

Pull Request resolved: https://github.com/pytorch/pytorch/pull/62035

Test Plan: Check the artifacts of a Windows job and confirm that .ninja_log is present.
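A quick way to use the downloaded artifact is to rank build steps by duration. This is a minimal sketch that assumes the v5 `.ninja_log` format (a `# ninja log v5` header, then tab-separated start ms, end ms, mtime, output path and command hash); `parse_ninja_log` and `slowest_steps` are hypothetical helpers, not part of the PyTorch CI scripts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NinjaEntry:
    start_ms: int
    end_ms: int
    output: str

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def parse_ninja_log(text: str) -> list[NinjaEntry]:
    """Parse an assumed v5 .ninja_log: start, end, mtime, output, hash (tab-separated)."""
    latest: dict[str, NinjaEntry] = {}
    for line in text.splitlines():
        if not line or line.startswith("#"):
            continue  # skip the "# ninja log v5" header and blank lines
        start, end, _mtime, output, _hash = line.split("\t")
        # Ninja appends to the log on every build; keep the newest entry per output.
        latest[output] = NinjaEntry(int(start), int(end), output)
    return list(latest.values())


def slowest_steps(text: str, n: int = 10) -> list[NinjaEntry]:
    """Return the n build outputs that took the longest, slowest first."""
    return sorted(parse_ninja_log(text), key=lambda e: e.duration_ms, reverse=True)[:n]
```

For example, `slowest_steps(Path(".ninja_log").read_text())` (with `from pathlib import Path`) lists the ten slowest outputs of the Windows build.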

Reviewed By: malfet

Differential Revision: D29852228

Pulled By: janeyx99

fbshipit-source-id: a3a87b709cd0c84f5b3cdc274ac4a623771c2b5c

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61903

### Remaining Tasks

- [ ] Collate results of benchmarks on two Intel Xeon machines (with & without CUDA, to check whether CPU throttling causes issues with GPUs) - make graphs, including Roofline model plots (Intel Advisor can't generate them with libgomp, only with Intel OpenMP).

### Summary

1. This draft PR produces binaries with 3 types of ATen kernels: default, AVX2, and AVX512. Setting the environment variable `ATEN_AVX512_256=TRUE` also results in 3 types of kernels, but the compiler can then use 32 ymm registers for AVX2 instead of the default 16. ATen kernels for `CPU_CAPABILITY_AVX` have been removed.

2. `nansum` is not using the AVX512 kernel right now, as its Float16 accuracy is poorer than with AVX2 or DEFAULT, whose respective accuracies aren't very good either (#59415).

It was more convenient to disable AVX512 dispatch for all dtypes of `nansum` for now.

3. On Windows, ATen Quantized AVX512 kernels are not being used, as quantization tests are flaky. If `--continue-through-failure` is used, `test_compare_model_outputs_functional_static` fails. If that test is skipped, `test_compare_model_outputs_conv_static` fails instead, and if both are skipped, a third one fails. These failures are hard to debug without access to a Windows machine with AVX512 support, so it was more convenient to disable AVX512 dispatch of all ATen Quantized kernels on Windows for now.

4. One test is currently being skipped:

[`test_lstm` in `quantization.bc`](https://github.com/pytorch/pytorch/issues/59098): it fails only on Cascade Lake machines, irrespective of the `ATEN_CPU_CAPABILITY` used, because FBGEMM uses `AVX512_VNNI` on machines that support it. `reduce_range` should be set to `False` on such machines.
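The dispatch rules in items 1-3 can be modelled in a few lines. ATen implements dispatch in C++, so the Python below is only a hypothetical sketch: `select_kernel`, the `quantized::` name prefix and the fallback from a disabled AVX512 kernel to AVX2 are assumptions made for illustration, and `requested` stands in for the `ATEN_CPU_CAPABILITY` environment variable.

```python
from __future__ import annotations

# The three kernel flavours this PR builds, from least to most capable.
CAPABILITY_ORDER = ["default", "avx2", "avx512"]

# Ops whose AVX512 kernels are not dispatched (item 2: nansum accuracy).
AVX512_DISABLED_OPS = frozenset({"nansum"})


def select_kernel(op: str, cpu_best: str, requested: str | None = None,
                  platform: str = "linux") -> str:
    """Return the kernel flavour a hypothetical dispatcher would pick for `op`."""
    rank = CAPABILITY_ORDER.index
    level = cpu_best
    # An explicit request (think ATEN_CPU_CAPABILITY) can only lower the level.
    if requested is not None and rank(requested) < rank(cpu_best):
        level = requested
    if level == "avx512":
        if op in AVX512_DISABLED_OPS:
            level = "avx2"  # assumed fallback for a disabled AVX512 kernel
        elif platform == "windows" and op.startswith("quantized::"):
            level = "avx2"  # item 3: quantized AVX512 kernels disabled on Windows
    return level
```

On an AVX512 machine, `select_kernel("nansum", "avx512")` would pick `"avx2"`, while an ordinary op keeps `"avx512"`.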

The list of the changes is at https://gist.github.com/imaginary-person/4b4fda660534f0493bf9573d511a878d.

Credits to ezyang for proposing `AVX512_256` - these use AVX2 intrinsics but benefit from 32 registers, instead of the 16 ymm registers that AVX2 uses.

Credits to limo1996 for the initial proposal, and for optimizing `hsub_pd` & `hadd_pd`, which didn't have direct AVX512 equivalents, and are being used in some kernels. He also refactored `vec/functional.h` to remove duplicated code.

Credits to quickwritereader for helping fix 4 failing complex multiplication & division tests.

### Testing

1. `vec_test_all_types` was modified to test basic AVX512 support, as tests already existed for AVX2.

Only one test had to be modified, as it was hardcoded for AVX2.

2. `pytorch_linux_bionic_py3_8_gcc9_coverage_test1` & `pytorch_linux_bionic_py3_8_gcc9_coverage_test2` are now using `linux.2xlarge` instances, as they support AVX512. They were used for testing AVX512 kernels, as AVX512 kernels are being used by default in both of the CI checks. Windows CI checks had already been using machines with AVX512 support.

### Would the downclocking caused by AVX512 pose an issue?

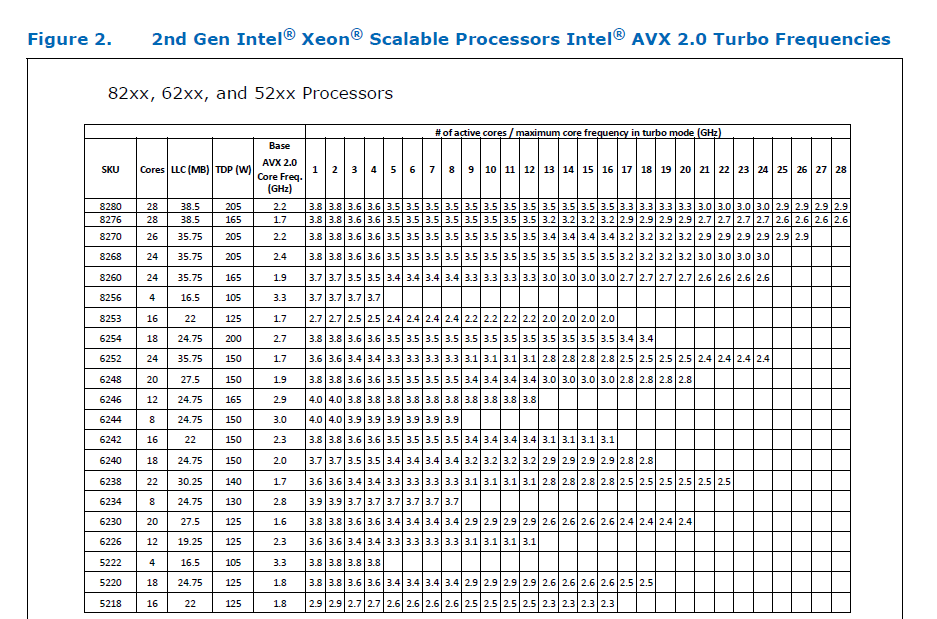

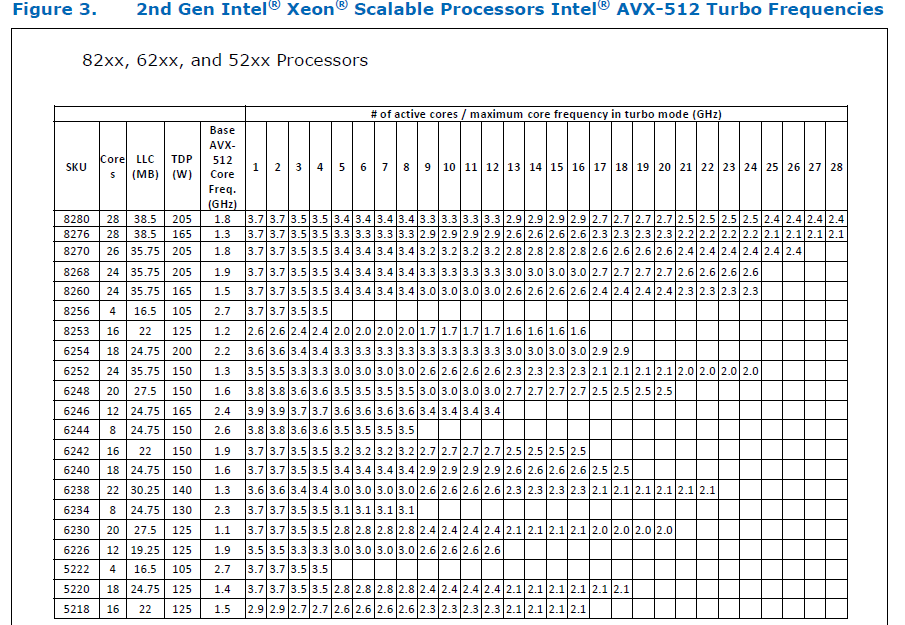

I think it's important to note that AVX2 causes downclocking as well, and the additional downclocking caused by AVX512 may not hamper performance on some Skylake machines and newer, because each AVX512 instruction processes twice as much data. I think that [this post with verifiable references is a must-read](https://community.intel.com/t5/Software-Tuning-Performance/Unexpected-power-vs-cores-profile-for-MKL-kernels-on-modern-Xeon/m-p/1133869/highlight/true#M6450). Also, AVX512 would _probably not_ hurt performance on a high-end machine, [but measurements are recommended](https://lemire.me/blog/2018/09/07/avx-512-when-and-how-to-use-these-new-instructions/). If it does, `ATEN_AVX512_256=TRUE` can be used when building PyTorch, as AVX2 can then use 32 ymm registers instead of the default 16. [FBGEMM uses `AVX512_256` only on Xeon D processors](https://github.com/pytorch/FBGEMM/pull/209), which are said to have poor AVX512 performance.

This [official data](https://www.intel.com/content/dam/www/public/us/en/documents/specification-updates/xeon-scalable-spec-update.pdf) is for the Intel Skylake family, and the first link helps understand its significance. Cascade Lake & Ice Lake SP Xeon processors are said to be even better when it comes to AVX512 performance.

Here is the corresponding data for [Cascade Lake](https://cdrdv2.intel.com/v1/dl/getContent/338848) -

The corresponding data isn't publicly available for Intel Xeon SP 3rd gen (Ice Lake SP), but [Intel mentioned that the 3rd gen has frequency improvements pertaining to AVX512](https://newsroom.intel.com/wp-content/uploads/sites/11/2021/04/3rd-Gen-Intel-Xeon-Scalable-Platform-Press-Presentation-281884.pdf). Ice Lake SP machines also have 48 KB L1D caches, so that's another reason for AVX512 performance to be better on them.

### Is PyTorch always faster with AVX512?

No, but then PyTorch is not always faster with AVX2 either. Please refer to #60202. The benefit from vectorization is apparent with small tensors that fit in caches, or in kernels that are more compute-heavy. For instance, AVX512 or AVX2 would yield no benefit for adding two 64 MB tensors, but adding two 1 MB tensors would do well with AVX2, and even more so with AVX512.

It seems that memory-bound computations, such as adding two 64 MB tensors, can be slower with vectorization (depending on the number of threads used), as the effects of downclocking then become visible.
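The cache argument can be made concrete with a little arithmetic: an element-wise `out = a + b` reads both inputs and writes the output, so its working set is three times the size of one tensor. This sketch treats MB as MiB, assumes float32, and uses a hypothetical 32 MiB cache; real cache sizes vary by CPU.

```python
MiB = 2**20


def elementwise_working_set(numel: int, itemsize: int = 4, operands: int = 3) -> int:
    """Bytes touched by out = a + b: two inputs and one output of `numel` elements."""
    return numel * itemsize * operands


def fits_in_cache(numel: int, cache_bytes: int, itemsize: int = 4) -> bool:
    return elementwise_working_set(numel, itemsize) <= cache_bytes


CACHE_BYTES = 32 * MiB  # hypothetical last-level cache size
small = MiB // 4        # float32 elements in a 1 MiB tensor
large = 64 * MiB // 4   # float32 elements in a 64 MiB tensor
print(elementwise_working_set(small) // MiB, fits_in_cache(small, CACHE_BYTES))  # 3 True
print(elementwise_working_set(large) // MiB, fits_in_cache(large, CACHE_BYTES))  # 192 False
```

Once the working set spills out of cache, the loop is expected to be limited by memory bandwidth, so wider vectors add little throughput while still paying the downclocking cost.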

Original pull request: https://github.com/pytorch/pytorch/pull/56992

Reviewed By: soulitzer

Differential Revision: D29266289

Pulled By: ezyang

fbshipit-source-id: 2d5e8d1c2307252f22423bbc14f136c67c3e6184

|

|

Summary:

Previously, running the smoke tests from the PyTorch root directory failed with a `ModuleNotFoundError` for `torch.version`.

This PR simply reorders the commands so that the smoke tests run within the test directory; they pass in this series of runs:

https://github.com/seemethere/test-repo/actions/runs/1050734298 (the failures are due to missing credentials during uploading stats, which we don't need here)
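The failure comes from import resolution: whichever `torch` directory appears first on `sys.path` wins, and a source checkout lacks `torch/version.py`, which is generated at build time. When the repository root is on `sys.path` ahead of site-packages (for example because it is the working directory of `python -m ...`), `import torch` resolves to the source tree. The sketch below reproduces the effect with a throwaway package; `shadowdemo_pkg` and `import_from_source_tree` are hypothetical names.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def import_from_source_tree(pkg_name: str) -> str:
    """Import a package whose __init__ needs a build-generated module that is absent."""
    with tempfile.TemporaryDirectory() as root:
        pkg_dir = Path(root) / pkg_name
        pkg_dir.mkdir()
        # Like torch/__init__.py -> torch_version.py -> .version, which only exists after a build.
        (pkg_dir / "__init__.py").write_text("from .version import __version__\n")
        sys.path.insert(0, root)  # the "repo root" is searched before site-packages
        importlib.invalidate_caches()
        try:
            importlib.import_module(pkg_name)
            return "imported"
        except ModuleNotFoundError as exc:
            return str(exc)
        finally:
            sys.path.remove(root)
            sys.modules.pop(pkg_name, None)


print(import_from_source_tree("shadowdemo_pkg"))  # No module named 'shadowdemo_pkg.version'
```

Running from `test/`, as this PR does, keeps the repository root off the front of `sys.path`, so the installed build should be imported instead.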

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61967

Reviewed By: samestep

Differential Revision: D29820985

Pulled By: janeyx99

fbshipit-source-id: 363ef321c32cfaf4446ceeb6117ea26abc311816

|

|

(#61808)

Summary:

The logic needed to fix how pytest imports the `benchmarks` module is a bit convoluted:

- On one hand, if we want to use `tools.stats.scribe` from `benchmarks`, we need to add `benchmarks/__init__.py`.

- On the other hand, adding `benchmarks/__init__.py` changes how `pytest` resolves imports: instead of the system-built `torch`, it picks up the local source module `../torch`.

- That's why we are seeing errors like:

```
ImportError while loading conftest '/var/lib/jenkins/workspace/benchmarks/fastrnns/conftest.py'.
benchmarks/fastrnns/__init__.py:1: in <module>
    from .cells import * # noqa: F403
benchmarks/fastrnns/cells.py:1: in <module>
    import torch
torch/__init__.py:29: in <module>
    from .torch_version import __version__ as __version__
torch/torch_version.py:9: in <module>
    from .version import __version__ as internal_version
E ModuleNotFoundError: No module named 'torch.version'
```

Instead, this PR changes `upload_scribe.py` back to its original form, which uses an HTTP request. For now, only CircleCI continues down this path by running `python benchmarks/upload_scribe.py`, gated by `if [[ -z "${GITHUB_ACTIONS}" ]];`.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61808

Reviewed By: seemethere

Differential Revision: D29750188

Pulled By: zhouzhuojie

fbshipit-source-id: 3b842b21978f2159001e9c6c1cdc96c5a0515f2e

|

|

Summary:

Reverts the revert of 3624d75, with an additional fix in https://github.com/pytorch/pytorch/pull/61764.

The correct logs are now sent to lambda:

```

...

,"21721":"OK","21722":"OK","21723":"OK","21724":"OK","21725":"OK","21726":"OK","21727":"OK","21728":"OK","21729":"OK","21730":"OK","21731":"OK","21732":"OK","21733":"OK","21734":"OK","21735":"OK","21736":"OK","21737":"OK","21738":"OK","21739":"OK","21740":"OK","21741":"OK","21742":"OK","21743":"OK","21744":"OK","21745":"OK","21746":"OK","21747":"OK","21748":"OK","21749":"OK","21750":"OK","21751":"OK","21752":"OK","21753":"OK","21754":"OK","21755":"OK","21756":"OK","21757":"OK","21758":"OK","21759":"OK","21760":"OK","21761":"OK","21762":"OK","21763":"OK","21764":"OK","21765":"OK","21766":"OK","21767":"OK","21768":"OK","21769":"OK","21770":"OK","21771":"OK","21772":"OK","21773":"OK","21774":"OK","21775":"OK","21776":"OK","21777":"OK","21778":"OK","21779":"OK","21780":"OK","21781":"OK","21782":"OK","21783":"OK","21784":"OK","21785":"OK","21786":"OK","21787":"OK","21788":"OK","21789":"OK","21790":"OK","21791":"OK","21792":"OK","21793":"OK","21794":"OK","21795":"OK","21796":"OK","21797":"OK","21798":"OK","21799":"OK","21800":"OK","21801":"OK","21802":"OK","21803":"OK","21804":"OK","21805":"OK","21806":"OK","21807":"OK","21808":"OK","21809":"OK","21810":"OK","21811":"OK","21812":"OK","21813":"OK","21814":"OK","21815":"OK","21816":"OK","21817":"OK","21818":"OK","21819":"OK","21820":"OK","21821":"OK","21822":"OK","21823":"OK","21824":"OK","21825":"OK","21826":"OK"}}

class StartProcessesTest:
tests: 14 failed: 0 skipped: 0 errored: 0
run_time: 4.86 seconds
avg_time: 0.35 seconds
median_time: 0.01 seconds
3 longest tests:
test_function_large_ret_val time: 1.55 seconds
test_pcontext_wait time: 1.11 seconds
test_void_function time: 1.03 seconds
...

```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61768

Reviewed By: janeyx99

Differential Revision: D29735781

Pulled By: zhouzhuojie

fbshipit-source-id: 6882e334f5108d20773ad66d5300cd37eb509ded

|

|

Summary:

Fixes the macOS build error on master caused by a recent MKL upgrade.

CircleCI error:

https://app.circleci.com/pipelines/github/pytorch/pytorch/351645/workflows/d22421c1-bb8f-48fd-9efd-7c0d77f0b083/jobs/14815607

```
Jul 16 11:43:05 CMake Error at /Users/distiller/workspace/miniconda3/lib/cmake/mkl/MKLConfig.cmake:456 (list):
Jul 16 11:43:05   list does not recognize sub-command PREPEND
Jul 16 11:43:05 Call Stack (most recent call first):
Jul 16 11:43:05   /Users/distiller/workspace/miniconda3/lib/python3.7/site-packages/torch/share/cmake/Caffe2/public/mkl.cmake:1 (find_package)
Jul 16 11:43:05   /Users/distiller/workspace/miniconda3/lib/python3.7/site-packages/torch/share/cmake/Caffe2/Caffe2Config.cmake:109 (include)
Jul 16 11:43:05   /Users/distiller/workspace/miniconda3/lib/python3.7/site-packages/torch/share/cmake/Torch/TorchConfig.cmake:68 (find_package)
Jul 16 11:43:05   CMakeLists.txt:5 (find_package)
```

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61773

Reviewed By: soulitzer

Differential Revision: D29736742

Pulled By: zhouzhuojie

fbshipit-source-id: 68c5244196f7f7562a6c202157c4ccdcfcb64337

|

|

Summary:

- [x] add the jobs to the matrix

  - [x] `jit_legacy`

  - [x] `nogpu_NO_AVX`

  - [x] `nogpu_NO_AVX2`

  - [x] `slow`

- [x] use the test config properly to enable the different test conditions

- [x] validate that it works

- [x] disable on pull requests before merging

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61055

Test Plan: CI. Example run: https://github.com/pytorch/pytorch/actions/runs/1013240987

Reviewed By: walterddr

Differential Revision: D29594080

Pulled By: samestep

fbshipit-source-id: 02c531ebc42feae81ecaea0785915f95e0f53ed7

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61005

build-results have the potential to be tainted between jobs since runs are not ephemeral.

Signed-off-by: Eli Uriegas <seemethere101@gmail.com>

Test Plan: Imported from OSS

Reviewed By: VitalyFedyunin

Differential Revision: D29526747

Pulled By: seemethere

fbshipit-source-id: f8c5bc5f647b771a059cbe380d694ce6dc535ae4

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61311

Pull Request resolved: https://github.com/pytorch/pytorch/pull/61152

Some related docs about `submodule.fetchJobs`

https://git-scm.com/docs/git-config#Documentation/git-config.txt-submodulefetchJobs

```
time git submodule update --init --recursive
________________________________________________________
Executed in  243.20 secs   fish           external
   usr time   49.64 secs  213.00 micros   49.64 secs
   sys time   29.27 secs  795.00 micros   29.27 secs
```

```
time git submodule update --init --recursive --jobs 4
________________________________________________________
Executed in  143.04 secs   fish           external
   usr time   51.06 secs  246.00 micros   51.06 secs
   sys time   30.96 secs  742.00 micros   30.96 secs
```

```
time git submodule update --init --recursive --jobs 8
________________________________________________________
Executed in  124.64 secs   fish           external
   usr time   51.76 secs  264.00 micros   51.76 secs
   sys time   30.49 secs  739.00 micros   30.49 secs
```

```
time git submodule update --init --recursive --jobs 0 # use all online cpus
________________________________________________________
Executed in  129.75 secs   fish           external
   usr time   51.64 secs  181.00 micros   51.64 secs
   sys time   31.49 secs  781.00 micros   31.49 secs
```
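The four runs above can be summarized as wall-clock speedups over the default run without `--jobs`; the numbers are copied from the timings shown, and the helper is just arithmetic.

```python
BASELINE_SECS = 243.20  # `git submodule update --init --recursive` with no --jobs
TIMINGS_SECS = {"--jobs 4": 143.04, "--jobs 8": 124.64, "--jobs 0": 129.75}


def speedups(baseline: float, timings: dict) -> dict:
    """Wall-clock speedup of each run relative to the baseline, rounded to 2 decimals."""
    return {flag: round(baseline / secs, 2) for flag, secs in timings.items()}


print(speedups(BASELINE_SECS, TIMINGS_SECS))
# {'--jobs 4': 1.7, '--jobs 8': 1.95, '--jobs 0': 1.87}
```

User and sys time stay at roughly 50 s and 30 s in every run, which suggests the gain comes from overlapping network and I/O waits rather than from extra CPU work. The same setting can be made persistent with `git config submodule.fetchJobs 8`.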

Test Plan: Imported from OSS

Reviewed By: 1ntEgr8

Differential Revision: D29560875

Pulled By: zhouzhuojie

fbshipit-source-id: 556027dffe744c66428075a8a1bf64683930aaaf

|

|

Summary:

Restores the ability of a user to call .jenkins/pytorch/build.sh while also setting PYTORCH_ROCM_ARCH. Otherwise, with IN_CI=1 as the new default, it will forcibly ignore user settings when build.sh is used outside of CI.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60602

Reviewed By: samestep

Differential Revision: D29490791

Pulled By: janeyx99

fbshipit-source-id: b5e8a529b8e0b5020b260b4bf027a37e0c1df8d5

|

|

Summary:

This PR removes `torch/testing/_internal/expecttest.py` in favor of https://github.com/ezyang/expecttest. See also https://github.com/ezyang/ghstack/pull/71.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60658

Test Plan: CI.

Reviewed By: ezyang

Differential Revision: D29430763

Pulled By: samestep

fbshipit-source-id: b7cdc7ba37330176149fd465312118e2254ae92e

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/60204

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision: D29430679

Pulled By: samestep

fbshipit-source-id: 9380f5535cd370ec7aabf609a6170c8cb4df505d

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60742

Improves the CI_MASTER flag check logic, since the flag can be unset, true, or false.

Test Plan:

search for `PYTORCH_TEST_SKIP_CUDA_MEM_LEAK_CHECK` in logs below:

- Before adding the ci/master label:

  - build workflow (`PYTORCH_TEST_SKIP_CUDA_MEM_LEAK_CHECK=1`): https://circleci.com/api/v1.1/project/github/pytorch/pytorch/14394913/output/107/0?file=true&allocation-id=60d5fd2fa55ae50282aec997-0-build%2F10295B30

- After adding the ci/master label:

  - build workflow (`PYTORCH_TEST_SKIP_CUDA_MEM_LEAK_CHECK=0`): https://circleci.com/api/v1.1/project/github/pytorch/pytorch/14398213/output/107/0?file=true&allocation-id=60d61cf8bb9d097afc7a11aa-0-build%2F400138F1

  - master build workflow (`PYTORCH_TEST_SKIP_CUDA_MEM_LEAK_CHECK=0`): https://circleci.com/api/v1.1/project/github/pytorch/pytorch/14398198/output/107/0?file=true&allocation-id=60d61ca3467438480c963290-0-build%2F2999C909
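The tri-state handling can be expressed as a small function. The real check lives in the CI shell scripts; this Python version is a hypothetical model that assumes the flag, when set, holds the string `true` or `false`, and it mirrors the Test Plan: the leak check is skipped (`1`) unless `CI_MASTER` is `true`.

```python
from typing import Mapping


def skip_cuda_mem_leak_check(env: Mapping[str, str]) -> str:
    """Value for PYTORCH_TEST_SKIP_CUDA_MEM_LEAK_CHECK given the CI environment."""
    ci_master = env.get("CI_MASTER")  # may be unset, "true" or "false"
    if ci_master is not None and ci_master.strip().lower() == "true":
        return "0"  # master (or ci/master-labelled) jobs run the leak check
    return "1"      # unset or "false": skip it
```

Treating "unset" the same as "false" is what makes the check safe on PR jobs that never export the flag.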

Reviewed By: ngimel

Differential Revision: D29405732

Pulled By: walterddr

fbshipit-source-id: 09dd653cbb47ca61b1f8872851bda6db8db671b9

|

|

Summary:

After https://github.com/pytorch/pytorch/issues/60543 they are installed in the same folder as the rest of the tests

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60705

Reviewed By: driazati

Differential Revision: D29380670

Pulled By: malfet

fbshipit-source-id: a432d26c731e9220e00d8c800b1429b37d51655b

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60543

Now that c10d is part of libtorch, it would be nice if its sources all lived in one place.

ghstack-source-id: 132306292

Test Plan: It builds

Reviewed By: cbalioglu

Differential Revision: D29062002

fbshipit-source-id: d9e1301e9d73e1643fa0f0119cd2d618f1ad52e6

|

|

Summary:

The reason I removed the smoke tests here was that we didn't have gflags on our GHA runners, and we wanted to get sharding done sooner rather than later.

However, we shouldn't remove these tests for Windows, as they are important for debugging linker issues with torch. This is therefore step 1 in adding the tests back.

Next steps:

- add gflags to the base AMI

- remove the existence check

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60571

Test Plan: CI shouldn't break

Reviewed By: walterddr

Differential Revision: D29341850

Pulled By: janeyx99

fbshipit-source-id: 7e0c98887534d096f867e28a5482b32aa493b132

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/60407

Test Plan: Imported from OSS

Reviewed By: mrshenli

Differential Revision: D29278179

Pulled By: H-Huang

fbshipit-source-id: ee78085eeb04d81842c95236b8c3a33de7142a3a

|

|

Summary:

Adds Windows CUDA smoke tests on PRs (master should still run the full suite).

Next step:

- Automate data update so we get a new smoke test list without manual effort

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59686

Test Plan: https://github.com/pytorch/pytorch/actions/runs/958296267. The sharded smoke tests still take a long time because of dependency installation.

Reviewed By: walterddr

Differential Revision: D29243533

Pulled By: janeyx99

fbshipit-source-id: dde7ba127fa15c95bda0e833cc5311598fb85e2b

|

|

Summary:

JOB_BASE_NAME for test1 and test2 were removed by https://github.com/pytorch/pytorch/issues/60124. This caused the ROCm CI to run all tests for both test1 and test2. Restore the use of JOB_BASE_NAME.

Fixes https://github.com/pytorch/pytorch/issues/60377.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60409

Reviewed By: anjali411

Differential Revision: D29277560

Pulled By: walterddr

fbshipit-source-id: ddf01466492a9a626ce1b6adf87cd102d8f1fe35

|

|

Summary:

Enables sharding for Windows on CI. To make that possible, we remove the smoke tests run in shard 1 for now. They don't seem all that important, as they are:

1. tested on nightlies

2. seemingly covered anyway by running the test suite

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59970

Reviewed By: seemethere

Differential Revision: D29268484

Pulled By: janeyx99

fbshipit-source-id: 7f90d73037cfeb2c267b28714550316eb471b4dd

|

|

Summary:

`IS_PYTORCH_CI` and `IN_CI` are used inconsistently, and in some cases `IN_CI` is not set because it only exists in .circleci/scripts/setup_ci_environment.sh. This cleans up the two flags so that only `IN_CI` is used.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60279

Test Plan: CI

Reviewed By: seemethere

Differential Revision: D29239545

Pulled By: walterddr

fbshipit-source-id: a069424a2bb8790a3adfdaf0dc460301026bf8c7

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59466

Change the saved parameter type from at::Tensor to at::IValue to support custom class parameters, e.g. `__torch__.torch.classes.xnnpack.Conv2dOpContext`.

The NNC-produced kernels won't deal with custom class parameters directly. They simply pass them through to the external operators that take these custom class parameters, e.g. `prepacked::conv2d_clamp_run`.

It reuses the `__getstate__` and `__setstate__` methods on the custom class to persist and restore the state of the parameters.

When calling into the kernel, it passes the untyped raw pointer of each custom class object to the kernel as `void*`. This is similar to regular tensor parameters, for which it passes the raw data pointer of the tensor storage. The generated kernel needs to hardcode the expected type of each parameter and cast it before calling the external ops.
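The `__getstate__`/`__setstate__` round trip follows the same pattern as Python's pickle protocol. The sketch below is a pure-Python analogy, not the TorchScript custom-class API (which registers these methods in C++ via `def_pickle`); `PackedConvContext` and its fields are hypothetical. Only the raw parameters are persisted, and the backend-specific packed form is rebuilt on load.

```python
import pickle


class PackedConvContext:
    """Hypothetical stand-in for an opaque op context such as Conv2dOpContext."""

    def __init__(self, weight, bias, stride):
        self.weight, self.bias, self.stride = weight, bias, stride
        self.packed = self._pack()

    def _pack(self):
        # Placeholder for backend-specific prepacking; never serialized.
        return tuple(reversed(self.weight))

    def __getstate__(self):
        # Persist only the raw parameters.
        return (self.weight, self.bias, self.stride)

    def __setstate__(self, state):
        self.weight, self.bias, self.stride = state
        self.packed = self._pack()  # rebuild the derived state after loading


restored = pickle.loads(pickle.dumps(PackedConvContext([1.0, 2.0, 3.0], [0.5], 1)))
print(restored.packed)  # (3.0, 2.0, 1.0)
```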

ghstack-source-id: 131897904

Test Plan: - unit tests

Reviewed By: kimishpatel

Differential Revision: D28902496

fbshipit-source-id: 4b2c0895dd28f0b7d344aa08183d42ad6a355dae

|

|

Summary:

This is a branch off of https://github.com/pytorch/pytorch/issues/59970 that only shards on Linux for now (we're running into issues with Windows gflags).

This enables sharding of tests for a few Linux jobs on GHA, roughly halving their time to signal (TTS).
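Halving TTS depends on splitting the tests into shards of similar total duration. One common way to do that is greedy longest-first assignment; the sketch below shows that technique under the assumption that per-test durations are known, and it is not necessarily the algorithm `run_test.py` uses.

```python
from __future__ import annotations

import heapq


def shard_tests(durations: dict[str, float], num_shards: int) -> list[list[str]]:
    """Greedy longest-first: give each test to the currently lightest shard."""
    heap = [(0.0, i) for i in range(num_shards)]  # (total seconds, shard index)
    shards: list[list[str]] = [[] for _ in range(num_shards)]
    for name, secs in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        total, idx = heapq.heappop(heap)
        shards[idx].append(name)
        heapq.heappush(heap, (total + secs, idx))
    return shards
```

With durations `{"test_a": 10, "test_b": 6, "test_c": 5, "test_d": 1}` and two shards, each shard totals 11 seconds.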

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60124

Reviewed By: zou3519

Differential Revision: D29204211

Pulled By: janeyx99

fbshipit-source-id: 1cc31d1eccd564d96e2aef14c0acae96a3f0fcd0

|

|

Summary:

Sets an environment variable so that the CUDA memory leak check only runs on master CI jobs.

See discussion in https://github.com/pytorch/pytorch/pull/59402#issuecomment-860773034

See stats before/after disabling mem leak check: https://github.com/pytorch/pytorch/pull/59942#issuecomment-860947095

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60023

Test Plan:

https://github.com/pytorch/pytorch/issues/60108

https://github.com/pytorch/pytorch/issues/60116

Reviewed By: janeyx99

Differential Revision: D29164182

Pulled By: walterddr

fbshipit-source-id: dfe88c2c1275b6eb35f18b58aacdc220f34ccb59

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60110

file_diff_from_base is currently bugged for ghstack PRs since it fails to find a merge base.

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: driazati

Differential Revision: D29168767

Pulled By: seemethere

fbshipit-source-id: 580a909aa392541769cbbfdc6acce1e6c5d1c341

|

|

Test Plan: revert-hammer

Differential Revision:

D29148233 (https://github.com/pytorch/pytorch/commit/241aac3ef81358577df40a38348c6a5744bed317)

Original commit changeset: 7c8c1866f39c

fbshipit-source-id: f32c6c6decd737ef290d3e83c9d021475aabaab0

|

|

Summary:

I believe IN_PULL_REQUEST is unset for some GHA test runs because we don't also check GITHUB_HEAD_REF. This PR is a small fix for that.

Example: https://github.com/pytorch/pytorch/pull/60023/checks?check_run_id=2831813860 doesn't set it properly
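GitHub Actions sets `GITHUB_HEAD_REF` only for `pull_request` and `pull_request_target` events, so a non-empty value is a usable PR signal there. This hypothetical helper combines it with CircleCI's `CIRCLE_PULL_REQUEST`; which variables the original check inspected is an assumption.

```python
from typing import Mapping


def in_pull_request(env: Mapping[str, str]) -> bool:
    """True when either CircleCI or GitHub Actions reports a pull-request build."""
    # CircleCI: URL of the associated PR (assumed to be what the existing check used).
    # GitHub Actions: GITHUB_HEAD_REF is only non-empty for pull_request(_target) events.
    return bool(env.get("CIRCLE_PULL_REQUEST") or env.get("GITHUB_HEAD_REF"))
```

In a CI script this would be called as `in_pull_request(os.environ)`.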

Pull Request resolved: https://github.com/pytorch/pytorch/pull/60047

Reviewed By: walterddr

Differential Revision: D29148233

Pulled By: janeyx99

fbshipit-source-id: 7c8c1866f39ce8af8d13c34ddc0c5786a829321e

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/59989

Test Plan: Imported from OSS

Reviewed By: samestep

Differential Revision: D29138211

Pulled By: ailzhang

fbshipit-source-id: 349d307c510e7fad266822e320f0d6904fa00239

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/59888

Test Plan: Imported from OSS

Reviewed By: samestep

Differential Revision: D29114274

Pulled By: ailzhang

fbshipit-source-id: d2845c7fc95d038cd68c10e22b68be8ad3cae736

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59840

moving these tests to their own standalone file. No meaningful code changes.

ghstack-source-id: 131359162

Test Plan: CI

Reviewed By: cbalioglu

Differential Revision: D29012664

fbshipit-source-id: 348870016509a6ed7e69240fa82bccef4a12d674

|

|

Summary:

Python 3.6 reaches end of life at the end of this year, so we should use a newer Python in CI.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59729

Reviewed By: bdhirsh

Differential Revision: D29006807

Pulled By: janeyx99

fbshipit-source-id: c79214b02a72656058ba5d199141f8838212b3b6

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59826

It's only informational and will run on Windows CPU executors as well

Fixes issues found in https://github.com/pytorch/pytorch/runs/2797531966

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: janeyx99

Differential Revision: D29042951

Pulled By: seemethere

fbshipit-source-id: 862094e53417c0a59d7728bf680be37b806b5a6f

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59752

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: samestep

Differential Revision: D29009775

Pulled By: seemethere

fbshipit-source-id: 5be1b818b5653a4fdbfe4a79731317068dc1a5d1

|

|

Summary:

Fixes incorrect logic in the Windows CPU build script: VERSION_SUFFIX shouldn't be `cpu`.

https://github.com/pytorch/pytorch/pull/59618/checks?check_run_id=2771591019

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59619

Reviewed By: samestep

Differential Revision: D29000213

Pulled By: seemethere

fbshipit-source-id: fcc474967e281fbf9be69f14c0aedfd01820573f

|

|

Test Plan: revert-hammer

Differential Revision:

D28981443 (https://github.com/pytorch/pytorch/commit/21121675b3ebaf40738535dabf2e0bdf69670700)

Original commit changeset: 5d24cccfb8c8

fbshipit-source-id: 14e5b610978882bace2f834f61e5457f62b11290

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59678

This reverts commit 2956bbaf2388d424ef986c22fac8287f7c345978.

Reland of https://github.com/pytorch/pytorch/pull/58782

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: samestep

Differential Revision: D28981443

Pulled By: seemethere

fbshipit-source-id: 5d24cccfb8c87832fa0233d0b524575dc04f8f05

|

|

Test Plan: revert-hammer

Differential Revision:

D28645531 (https://github.com/pytorch/pytorch/commit/51884c647965d704bd25150784729f8449459264)

Original commit changeset: 6ed1a2dead9c

fbshipit-source-id: e082d7d50de77d0572596111e95a3da3a350a319

|

|

Summary:

Reland of https://github.com/pytorch/pytorch/issues/59487

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59649

Reviewed By: samestep

Differential Revision: D28970751

Pulled By: janeyx99

fbshipit-source-id: 6e28d4dcfdab8a49da4b6a02c57516b08bacd7b5

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58782

[skip ci]

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: samestep

Differential Revision: D28645531

Pulled By: seemethere

fbshipit-source-id: 6ed1a2dead9cca29e26e613afdbcf46ba7cee88c

|

|

run_test.py

Test Plan: revert-hammer

Differential Revision:

D28961233 (https://github.com/pytorch/pytorch/commit/a6c9483c2f65e35a01bd5649caf45903f3c6845d)

Original commit changeset: 6b7ddc6e6185

fbshipit-source-id: 4f8471df987a03d5c928a04f989d5d43f9cc47e9

|

|

Summary:

`pip install ninja==1.9.0` suddenly started failing in CI.

I reproduced the failure locally and on a colleague's machine.

It looks like it conflicts with the CMake installed by conda.

https://app.circleci.com/pipelines/github/pytorch/pytorch/332470/workflows/d8b6ed30-1c7e-4863-898a-7f067c6202e1/jobs/13972409

ninja 1.10.0 couldn't be installed either.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59625

Reviewed By: jbschlosser

Differential Revision: D28966699

Pulled By: seemethere

fbshipit-source-id: a1150e411ba3b4ab65448a087aa65f4ebe6c3596

|

|

Summary:

The run-specified-test-cases option lets us specify a list of test cases to run via a CSV with at least two columns: test_filename and test_case_name.

This PR also adds a .json extension to some of the files we use, for clarity.

Usage:

`python test/run_test.py --run-specified-test-cases <csv_file>` where the csv file can look like:

```
test_filename,test_case_name,test_total_time,windows_only_failure_sha_count,total_sha_count,windows_failure_count,linux_failure_count,windows_total_count,linux_total_count
test_cuda,test_cudnn_multiple_threads_same_device,8068.8409659525,46,3768,53,0,2181,6750
test_utils,test_load_standalone,8308.8062920459,14,4630,65,0,2718,8729
test_ops,test_forward_mode_AD_acosh_cuda_complex128,91.652619369806,11,1971,26,1,1197,3825
test_ops,test_forward_mode_AD_acos_cuda_complex128,91.825633094915,11,1971,26,1,1197,3825
test_profiler,test_source,60.93786725749,9,4656,21,3,2742,8805
test_profiler,test_profiler_tracing,203.09352795241,9,4662,21,3,2737,8807
```
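Conceptually, the option groups the CSV rows by `test_filename` and turns each group into a single `-k` filter, as in the pytest invocation shown in the Test Plan. The sketch below is an illustration under that assumption, not the actual `run_test.py` code; `load_specified_test_cases` and `pytest_command` are hypothetical names, and extra columns such as `test_total_time` are simply ignored.

```python
from __future__ import annotations

import csv
import io
from collections import defaultdict


def load_specified_test_cases(csv_text: str) -> dict[str, list[str]]:
    """Map each test file to the test cases listed for it; extra columns are ignored."""
    cases: dict[str, list[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        cases[row["test_filename"]].append(row["test_case_name"])
    return dict(cases)


def pytest_command(python: str, test_file: str, case_names: list[str]) -> list[str]:
    """Build a pytest argv that runs only the named cases of one test file."""
    return [python, "-m", "pytest", f"{test_file}.py", "-v", "-k", " or ".join(case_names)]
```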

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59487

Test Plan:

Without the option, behavior should be unchanged.

Running `python test/run_test.py --run-specified-test-cases windows_smoke_tests.csv` resulted in this paste P420276949 (internal only). A snippet looks like:

```
(pytorch) janeyx@janeyx-mbp pytorch % python test/run_test.py --run-specified-test-cases windows_smoke_tests.csv
Loading specified test cases to run from windows_smoke_tests.csv.
Processed 28 test cases.
Running test_cpp_extensions_jit ... [2021-06-04 17:24:41.213644]
Executing ['/Users/janeyx/miniconda3/envs/pytorch/bin/python', 'test_cpp_extensions_jit.py', '-k', 'test_jit_cuda_archflags'] ... [2021-06-04 17:24:41.213781]
s
----------------------------------------------------------------------
Ran 1 test in 0.000s

OK (skipped=1)
...
```

With pytest, the corresponding log lines look like:

`Running test_dataloader ... [2021-06-04 17:37:57.643039]`

`Executing ['/Users/janeyx/miniconda3/envs/pytorch/bin/python', '-m', 'pytest', 'test_dataloader.py', '-v', '-k', 'test_segfault or test_timeout'] ... [2021-06-04 17:37:57.643327]`

Reviewed By: jbschlosser

Differential Revision: D28961233

Pulled By: janeyx99

fbshipit-source-id: 6b7ddc6e61856aa0002e1a0afc845770e4f8400b

|

|

Summary:

Partially addresses https://github.com/pytorch/pytorch/issues/55340

**Overview**

This factors out `FileStoreTest`, `HashStoreTest`, `PrefixFileStoreTest`, `TCPStoreTest`, `PrefixTCPStoreTest`, `PythonStoreTest`, `RendezvousTest`, `RendezvousEnvTest`, `RendezvousFileTest`, and `RendezvousTCPTest` from `test_c10d_common.py` to a new file `test_store.py`.

Additionally, unused import/initialization statements are removed from `test_c10d_common.py`, and only the minimal set of import/initialization statements is used in `test_store.py`.

Also, this changes `.jenkins/pytorch/multigpu-test.sh`, `.jenkins/pytorch/win-test-helpers/test_distributed.bat`, and `test/run_test.py` to include the new `test_store.py`.

**Testing**

All commands shown are run on an AI AWS cluster.

I check the Store tests:

```
python test/distributed/test_store.py
```

I also check `test_c10d_common.py` since it is the source of the refactored code. In addition, I check `test_c10d_nccl.py` and `test_c10d_gloo.py` since they import from `test_c10d_common.py`; those two should be the only test files depending on `test_c10d_common.py`.

```
python test/distributed/test_c10d_common.py
python test/distributed/test_c10d_nccl.py
python test/distributed/test_c10d_gloo.py
```

`test_c10d_gloo.py` produces warnings about how using sparse tensors in TorchScript is experimental, but the warnings do not result from this PR's changes.

**Testing Issues** (To Be Revisited)

```

WORLD_SIZE=4 BACKEND=gloo gpurun pytest test/distributed/test_c10d_gloo.py

```

Running the above command fails three tests (written as `[Test]`: `[Error]`):

- `ProcessGroupGlooWrapperTest.test_collective_hang`: `RuntimeError: [../third_party/gloo/gloo/transport/tcp/pair.cc:598] Connection closed by peer [10.200.24.101]:15580`

- `CommTest.test_broadcast_coalesced_gloo_cuda`: `RuntimeError: cuda runtime error (3) : initialization error at ../aten/src/THC/THCGeneral.cpp:54`

- `CommTest.test_sequence_num_incremented_gloo_default`: `RuntimeError: cuda runtime error (3) : initialization error at ../aten/src/THC/THCGeneral.cpp:54`

However, running each of the following yields no errors:

```

WORLD_SIZE=4 BACKEND=gloo gpurun pytest test/distributed/test_c10d_gloo.py -k test_collective_hang

WORLD_SIZE=4 BACKEND=gloo gpurun pytest test/distributed/test_c10d_gloo.py -k test_broadcast_coalesced_gloo_cuda

WORLD_SIZE=4 BACKEND=gloo gpurun pytest test/distributed/test_c10d_gloo.py -k test_sequence_num_incremented_gloo_default

```

This suggests some inadvertent state dependency between tests (e.g. improper cleanup). I have not explored this further yet. In particular, I do not understand the tests well enough to explain why using `pytest` and `gpurun` induces the failures (notably, running the `.py` file directly shows no issue).

Similarly, running the following yields 47 errors:

```

WORLD_SIZE=4 BACKEND=nccl gpurun pytest test/distributed/test_c10d_nccl.py

```

The errors all seem to be complaints about using `fork()` instead of `spawn()` for CUDA multiprocessing. However, most of the tests in `test_c10d_nccl.py` require at least 2 CUDA devices, so I think `gpurun` is warranted (assuming that the test file does not need to be run partially on different machines).
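The `fork()`-versus-`spawn()` complaint stems from the fact that a CUDA context cannot be re-initialized in a forked child process, so subprocesses that use CUDA need the `spawn` (or `forkserver`) start method. A minimal sketch of choosing the start method per workload, using the standard-library `multiprocessing` API (which `torch.multiprocessing` wraps); the helper name `make_context` is hypothetical.

```python
import multiprocessing as mp


def make_context(uses_cuda: bool):
    """Hypothetical helper: choose a multiprocessing context for child processes.

    CUDA cannot be re-initialized in a forked child, so workers that touch CUDA
    get the "spawn" start method. CPU-only workers keep the platform default.
    """
    if uses_cuda:
        return mp.get_context("spawn")
    return mp.get_context()  # default context for this platform
```

Processes created through the returned context (for example `ctx.Process(...)` or `ctx.Pool(...)`) use that start method without changing the global default. With `spawn`, the launching code should sit under an `if __name__ == "__main__":` guard, because each child re-imports the main module.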

Both `test_c10d_common.py` and `test_store.py` work fine with `pytest`.

**Other Notes**

I noticed that `torch.distributed` is imported both as `dist` and as `c10d` and that `c10d` is used throughout the Store tests. I was curious whether this is intentional (as opposed to using `dist` to refer to `torch.distributed`). Also, the original [issue](https://github.com/pytorch/pytorch/issues/55340) suggests that the Store tests do not use multiprocessing, but I saw that `torch.multiprocessing` is still used in `TCPStoreTest`.

The links for the Store files in the `CONTRIBUTING.md` [file](https://github.com/pytorch/pytorch/blob/master/torch/distributed/CONTRIBUTING.md) are broken. This can be fixed in a separate PR.

Pull Request resolved: https://github.com/pytorch/pytorch/pull/59271

Reviewed By: jbschlosser, mrshenli

Differential Revision: D28856920

Pulled By: andwgu

fbshipit-source-id: 630950cba18d34e6b5de661f5a748f2cddc1b446

|

|

Summary: Pull Request resolved: https://github.com/pytorch/pytorch/pull/58872

Test Plan: verify tests running on CI as expected

Reviewed By: suo

Differential Revision: D28646660

fbshipit-source-id: eb7d784844fb7bc447b4232e2f1e479d4d5aa72f

|

|

Summary:

The fix is simple: alias inputs before feeding them to distinct torchdeploy interpreters.

Signed-off-by: Edward Z. Yang <ezyang@fb.com>

Fixes https://github.com/pytorch/pytorch/issues/58832

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58871

Reviewed By: wconstab, zou3519

Differential Revision: D28646784

Pulled By: ezyang

fbshipit-source-id: 6d2850f3226b5b99468d1465723b421ce4d7ab89

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58832

While we investigate breakage.

Differential Revision: D28631469

Test Plan: Imported from OSS

Reviewed By: SplitInfinity

Pulled By: suo

fbshipit-source-id: 43d51c1c9d81e951074824ccf624e42f6bec4242

|

|

Summary:

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58199

Signed-off-by: Eli Uriegas <eliuriegas@fb.com>

Test Plan: Imported from OSS

Reviewed By: malfet

Differential Revision: D28465272

Pulled By: seemethere

fbshipit-source-id: d221ad71d160088883896e018c58800dae85ff2c

|

|

Summary:

Fixes #{issue number}

gunandrose4u

Pull Request resolved: https://github.com/pytorch/pytorch/pull/51936

Reviewed By: malfet

Differential Revision: D28467662

Pulled By: seemethere

fbshipit-source-id: 28d203ee3af13d6a3158f188c2e889e310ee6010

|

|

Summary:

Some machines don't have a versionless `python` on their PATH, which breaks these existing shebangs.

I'm assuming that all the existing versionless `python` shebangs are meant to be `python3` and not `python2`; please let me know if my assumption was incorrect for any of these.
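A minimal sketch of the kind of rewrite described, assuming the shebangs use the `#!/usr/bin/env python` form; `fix_shebang` is a hypothetical helper, not the script used for this PR. It only touches a versionless `python` on the first line, so existing `python3` or `python2` shebangs are left alone.

```python
import re

# Matches a versionless env-style shebang, e.g. "#!/usr/bin/env python" or
# "#!/usr/bin/env python -u", but not "python3" or "python2".
_VERSIONLESS = re.compile(r"^#!(\S*/env\s+)python(\s|$)")


def fix_shebang(source: str) -> str:
    """Rewrite a versionless `python` shebang on the first line to `python3`."""
    first, sep, rest = source.partition("\n")
    fixed = _VERSIONLESS.sub(r"#!\1python3\2", first, count=1)
    return fixed + sep + rest
```

Restricting the match to the first line and requiring whitespace or end-of-line after `python` keeps the rewrite from touching the script body or already-versioned interpreters.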

Pull Request resolved: https://github.com/pytorch/pytorch/pull/58275

Test Plan: CI.

Reviewed By: zhouzhuojie

Differential Revision: D28428143

Pulled By: samestep

fbshipit-source-id: 6562be3d12924db72a92a0207b060ef740f61ebf

|